When “Responsible AI” Becomes a Wall Instead of a Guide

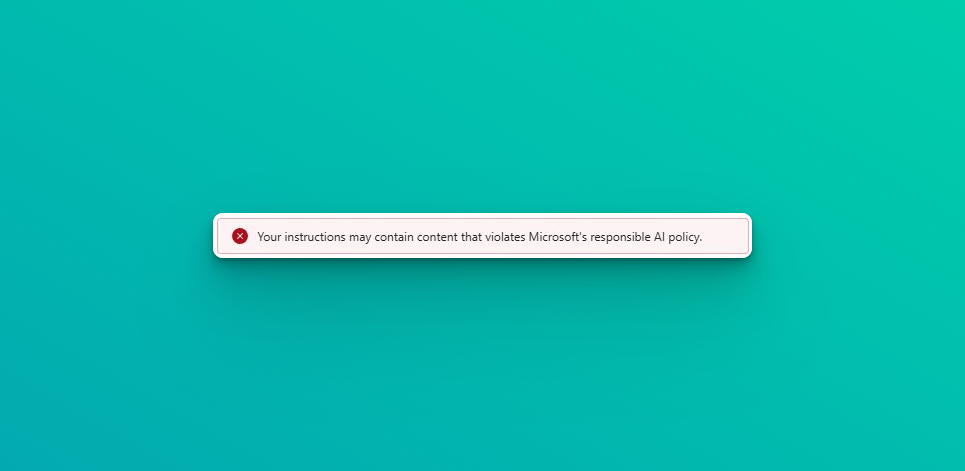

The other day, I ran into this delightful message:

“Your instructions may contain content that violates Microsoft’s responsible AI policy.”

That’s it. No explanation. No hint of what part is problematic. Just a big red warning, suggesting that somewhere, somehow, I might be about to do something bad.

The Cynicism of Corporate Responsibility

Now, I’m not against the idea of “responsible AI.” Quite the opposite. Of course, AI needs guardrails. But here’s where the cynicism kicks in: if a trillion-dollar, shareholder-value-driven company gets to define what “responsible” means—without transparency or clarity—then we’re left in a strange place.

In my case, the alleged violation was a tiny phrase in a system prompt: “and end with an emotional plea to [emoji].” That’s what triggered the red box. Seriously. If that’s the standard of harm prevention, I think we’re mixing up ethics with automated word policing.

Schrödinger’s Violation

The irony? Responsible AI should empower people to understand why something is blocked, and to reflect on whether that’s appropriate. Instead, we get vague alerts like “may contain content.”

May. Might. Possibly.

It’s Schrödinger’s violation—simultaneously allowed and forbidden until a corporate filter decides otherwise.

A Pacific Northwest Moral Compass

And here’s the uncomfortable truth: I don’t think it should be Microsoft—or any US-based tech giant for that matter—that sets the global moral compass. These are companies built on shareholder value. Their definition of “responsibility” will always carry that DNA.

When I say “US-based,” I mean it literally: chances are high that a product manager in the Pacific Northwest decides what is “ok” or “not ok” for a global audience. That decision is then baked into tools millions of people use worldwide. But what fits the cultural context of Seattle doesn’t automatically translate into Delhi, Vienna, or São Paulo. And yet, in the global situation we’re in right now, it means exactly that: someone from the US defines the guardrails for everyone else.

And honestly, if we look at how they handle multiple languages, I’m not sure the diversity of the globe is really reflected in their moral positioning.

The Political Moment

And if we zoom out for a moment: with everything happening in the world politically right now, isn’t it even more dangerous to let a single source define the “ethics” of a technology? Our societies are polarized enough already. Different world regions struggle with different truths, different narratives, different wounds.

Handing over the role of global ethics referee to a single corporate entity—sitting safely in one corner of the world—feels naïve at best.

Pandora’s Box

And then there’s Pandora’s box: why does a company like Microsoft even need to tell us that AI should be used “responsibly”?

Usually you see that kind of disclaimer on alcohol bottles, gambling apps, or payday loan ads—industries that know their products can do real harm if abused. Is that the category Microsoft wants AI to be in? And what’s their true intent? To genuinely protect users—or to shield themselves from accountability?

Where Responsibility Belongs

The world needs more diverse and independent places to negotiate what “responsible” really means.

- Governments
- Communities
- Academia
- Civil society

These are the places for the messy, sometimes slow, but much more legitimate conversations about what we value as humans.

Until then, we’ll continue to see red error boxes that “might” be violations, without ever being told what they violate.

*Note: AI was used to polish the English in this post, but not the arguments. As a non-native English speaker, I often feel my thoughts lose some impact when expressed in English compared to German. This way, the words are clearer, but the perspective remains mine. And honestly, that polishing is important: otherwise I risk coming across as too opinionated, as German speakers often do, or far too cynical, as the Austrian I am.*